The Institute for Data-Intensive Engineering and Science (IDIES) hosted its annual symposium on Thursday, Oct. 16. The symposium opened with remarks from Alex Szalay – Bloomberg Distinguished Professor of Big Data and Director of IDIES – on the rapid evolution of data science and its expanding applications. Over the past 25 years, many scientific breakthroughs have emerged from unique data sets, including the mapping of the entire human genome through the Human Genome Project and the imaging of the universe and celestial bodies via the Sloan Digital Sky Survey (SDSS).

“We are living through a revolution with an unprecedented pace and agility,” Szalay began, highlighting how “half of the material that we are teaching in semester-long courses will be either wrong or outdated by the end of the semester.”

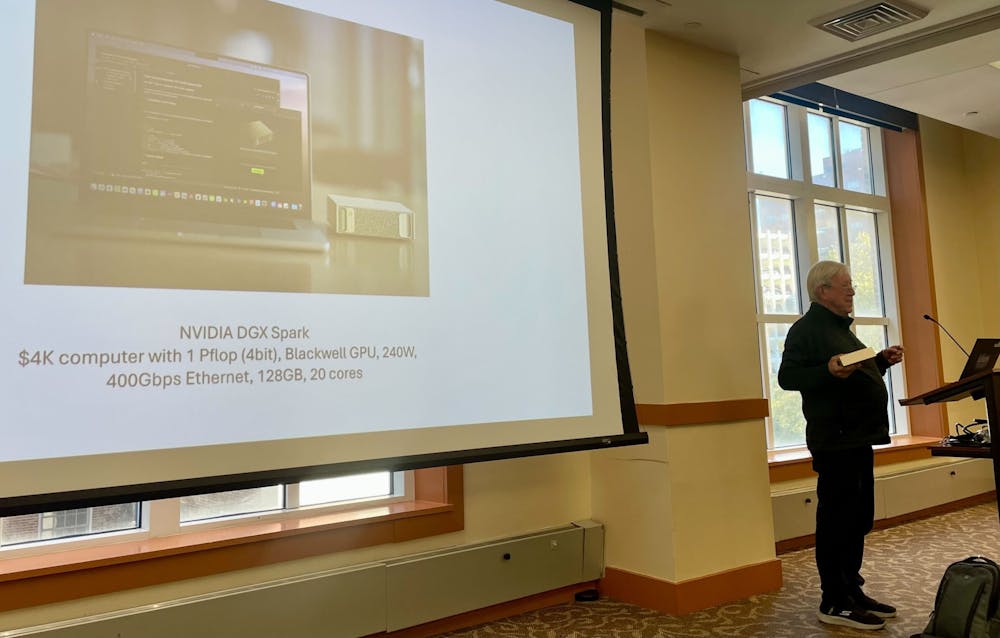

To demonstrate the incredible progress in recent computing technology, Szalay showed symposium attendees the newly released NVIDIA DGX Spark. Weighing just 2.65 pounds and roughly the size of a book, the compact computer houses 128 gigabytes of coherent unified system memory, a type of memory in which the CPU and GPU can access the same data without redundant transfers between them. With this memory, the system is powerful enough to run large language models such as OpenAI's GPT-OSS 120B and Meta's Llama 3.1 70B. Ten years ago, such high-level computing would have been available only to engineers working painstakingly on a well-funded project. Now, a $4,000 credit card purchase is all it takes for a regular person to get their hands on this technology.

“I predict that each of these machines [will be able to] run a generative AI next to every microscope in the lab and filter the data on every telescope,” Szalay said, emphasizing the increasing interconnectedness of AI, data science and research at large.

Although high-power computing is, without a doubt, becoming increasingly widespread and democratized across the world through the internet and open-source platforms, Szalay argued that various factors lead to inequitable access to computing and its benefits. Considering this, Szalay concluded his remarks: “The future is already here. It's just not evenly distributed.”

Following Szalay's opening remarks, keynote speaker Stuart Feldman, the Chief Science Officer and President at Schmidt Sciences, gave a talk titled “Scientific Software, Software Engineering, and Philanthropy.”

Currently, we have the tools to generate and collect large amounts of data at an unprecedented rate, but making sense of it – breaking it down into understandable, actionable insights – is another challenge altogether. Feldman emphasized the importance of software innovation in tackling this challenge.

“Scientific computing is the only way we understand anything about complex phenomena. Mathematics brought us great techniques for understanding linear equations. Unfortunately, the world ain't linear, and once you get nonlinear, you might as well just compute… Real engineering is needed. Code can last a long time,” Feldman explained. In fact, Make, the build-automation tool that Feldman himself wrote nearly 50 years ago, is still widely used on Unix-like operating systems today.

Indeed, scientific software has enabled the analysis of massive datasets, the simulation of complex systems and the automation of repetitive tasks, all of which are essential for modern research in fields ranging from astrophysics and space science, genomics and the biological sciences to climate studies and materials design. Later in the symposium, faculty and students presented data- and computation-intensive projects, which included a real-time model of the stomach integrating fluid dynamics and AI software.

Feldman emphasized the lasting impact of well-crafted software, noting, “Software for science is a real mix: mathematics, feedback loops, statistics and probability, crossed with data in real time. And it’s got to be correct.”

To illustrate this point, Feldman shared an anecdote: “I have seen the difference between part-time graduate students who are pecking away to finish something and a real-world research project with full-time, professional engineers. The differences are horrifying… A basic rule is that a graduate student has only one job, which is to get out of there with one piece of paper in and one piece of paper out. Whereas, the code may live on for 50 years when done well.”

Although the explosive rise of AI may render some professions obsolete, the intuition, adaptability and experience of skilled engineers and problem-solvers will remain vital to the integrity of scientific advancement.

Feldman elaborated: “At one side, there's software engineering for AI, the building of large model systems. This is heavy duty, very scientific computing. Even if you're doing this with a lot of delicate correction factors, there's unprecedented scale… you need, therefore, real engineering discipline that's going into validation of the computing. Nobody can check the answers other than us.”

While AI may offer an undeniable level of convenience across a wide range of tasks, it is far from a perfect solution to our problems.

“To what extent can AI do [tasks] for you? To what extent do you trust that it got the answer right? Why should it not be hallucinating your test results also?” Feldman asked. He then joked that “no AI system will I trust more than an experienced plumber.”

To conclude his talk, Feldman posed a central question: “This is the mass implication. How do we get software written? How do we get answers created that are credible and prompt?”

The challenge is ours to tackle, and meeting it depends on the thoughtful development of software, the responsible use of AI and the thorough analysis of data. The next breakthroughs in science will come not just from faster computers, but from minds that know how to wield them wisely.