Maximilian Riesenhuber, a professor of Neuroscience at the Georgetown University Medical Center in Washington, D.C., spoke about the hierarchical processing involved in object recognition and deep learning in the brain, as well as their implications for artificial intelligence (AI) technology, on Sept. 25 in Krieger Hall.

During his talk, Riesenhuber discussed his research on the neural mechanisms underlying object recognition, which combines computational modeling with human behavioral, electroencephalogram (EEG) and functional magnetic resonance imaging (fMRI) experiments.

He outlined experiments involving the recognition of words by shape rather than spelling and the brain’s hierarchical processing from object-tuned units to task units.

Understanding these natural mechanisms, he believes, could help improve AI systems in engineering, which lag behind the brain in flexibility and robustness. The News-Letter had the opportunity to interview Riesenhuber after his talk.

The News-Letter: What inspired you to pursue research about learning?

Maximilian Riesenhuber: Arguably, learning is key to understanding intelligent behavior. As my former mentor Tomaso Poggio put it, “humans are the least hardwired beings on earth.” Learning is crucial for survival — to distinguish friend from foe, food from non-food and other essentials — but it’s also foundational for human culture, to do science, etc.

We constantly learn from others, from our environment (especially when that environment is Hopkins – lots to learn there). And thanks to research in cognitive science and cognitive neuroscience, we are not only understanding better how the human mind and brain learn, but also how these insights can be translated into facilitating learning, for example, in education.

N-L: What kind of impact do you think your findings might have on technology in the future, especially concerning AI?

MR: The current “deep learning” success in AI has drawn heavily from insights in neuroscience, and as we are understanding better how the brain learns, in particular how the brain’s processing architecture can allow learning with much more flexibility and efficiency than current schemes, it should likewise help inspire more powerful learning algorithms for AI, that, for example, are better at leveraging prior learning to learn new concepts, that can learn from fewer examples, or that can better deal with real-world variability.

N-L: Why do you believe somatosensation is the next frontier for research in the field?

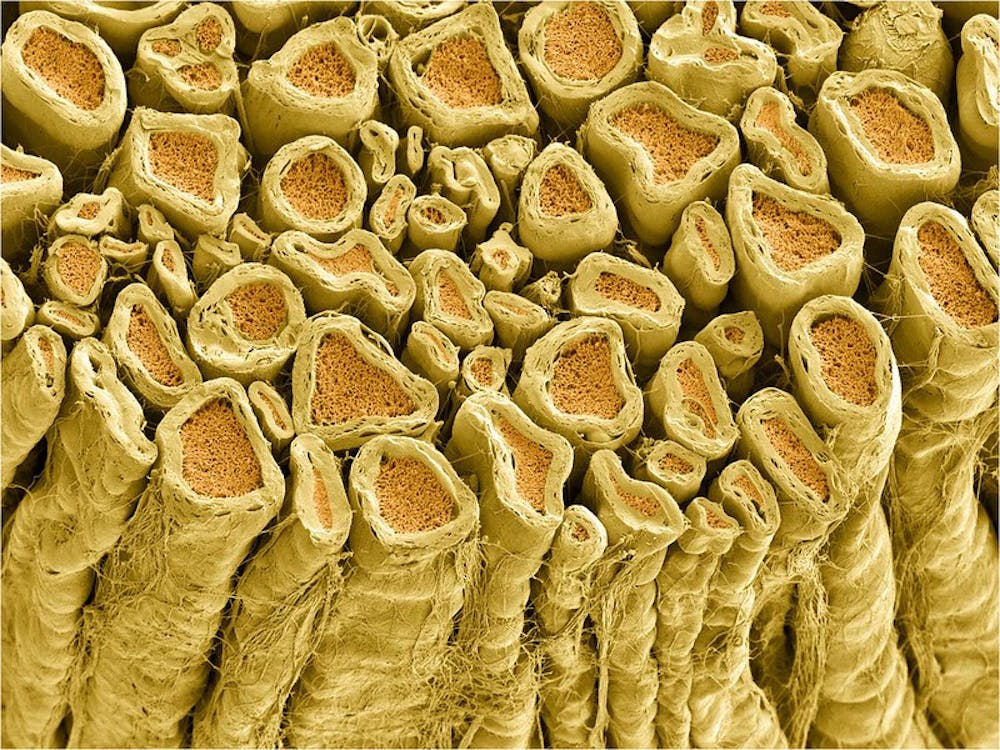

MR: It’s really an under-explored sense. We know a lot about vision and audition because they are comparatively easy to study. Somatosensation is more difficult in that respect.

On the other hand, your skin is your biggest receptor, and we know that we can do amazing things with our tactile sense – just think of Braille reading. Understanding touch is also interesting from an applied point of view, as it’s a sensory channel that’s not used much in current user interfaces.

But this is changing as people are realizing the potential of transmitting information through touch, with new devices like the Apple Watch or even dedicated devices like the Moment from Somatic Labs.

Maximilian Riesenhuber, who has received several awards, including an NSF CAREER Award and a McDonnell-Pew Award in Cognitive Neuroscience, hopes to investigate somatosensory learning in the future.
